How to Detect and Stop Impersonators in Your Community

Impersonation is one of the oldest tricks in the scammer playbook. But in today’s world of Discord servers, Telegram groups, online forums, and digital communities, impersonation has evolved into something far more scalable - and far more dangerous.

From fake “Administrators” offering urgent support, to copycat accounts mimicking moderators and community managers, impersonation is now a daily threat for online communities of all sizes.

In this guide, we’ll cover:

- What impersonation is

- What brand impersonation looks like in online communities

- Real-world examples of impersonation tactics

- Why manual moderation often fails

- How AI-powered tools like Watchdog automatically detect impersonators and stop scams before they spread

If you run an online community for your brand, this isn’t optional reading.

What Is Impersonation?

Impersonation is when someone pretends to be another person or authority figure in order to deceive others.

In online communities, impersonation typically involves:

- Changing a username to resemble an admin or moderator

- Copying profile pictures and display names

- Claiming to represent the team, support, or leadership

- Sending direct messages that appear official

The goal is almost always financial gain, credential theft, or manipulation.

Impersonation exploits one powerful psychological lever: authority.

When users believe they’re speaking to an admin, moderator, or official team member, they lower their guard.

That’s exactly what scammers count on.

What Is Brand Impersonation?

While impersonation can target individuals, brand impersonation targets organizations, companies, and communities.

Brand impersonation happens when someone:

- Pretends to represent your company

- Uses your logo or branding

- Claims to be “official support”

- Mimics your tone, formatting, or communication style

- Directs users to fake websites or phishing pages

In community platforms like Discord or Telegram, brand impersonation often overlaps with moderator impersonation.

For example:

- A scammer changes their nickname to “Support Team”

- They use your logo as their avatar

- They DM members claiming there’s a “security issue”

- They send a malicious link to “verify your wallet” or “confirm your account”

The damage can be severe:

- Financial losses for members

- Erosion of trust

- Public accusations against your brand

- Legal complications

Even worse, victims often blame the community—not the scammer.

Common Impersonation Tactics in Discord and Telegram

Let’s look at real-world patterns we see repeatedly in online communities.

1. Username Authority Hijacking

A scammer joins your server and changes their display name to:

- Administrator

- Admin

- Moderator

- Mod Team

- Community Manager

- Official Support

- Security Team

Even if their actual username differs, many platforms prominently display nicknames—so users see the authority title first.

In busy communities, this works shockingly well.
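
To make this pattern concrete, here is a minimal sketch of an authority-title check. It is an illustration under stated assumptions, not how any particular tool works: the `RESERVED_TITLES` list is taken from the examples above, and `has_staff_role` is a hypothetical flag your own bot would supply from the platform's role data.

```python
import re
import unicodedata

# Hypothetical reserved titles, based on the examples in this section.
RESERVED_TITLES = [
    "administrator", "admin", "moderator", "mod team", "mod",
    "community manager", "official support", "support", "security team",
]

def normalize(name: str) -> str:
    """Fold case and compatibility forms, and turn separators into spaces."""
    text = unicodedata.normalize("NFKC", name).casefold()
    # Anything that is not a-z or 0-9 (underscores, emoji, dots) becomes a space.
    return re.sub(r"[^a-z0-9]+", " ", text).strip()

def claimed_titles(display_name: str) -> list[str]:
    """Return every reserved title that appears as a whole word in the name."""
    norm = normalize(display_name)
    return [t for t in RESERVED_TITLES
            if re.search(rf"\b{re.escape(t)}\b", norm)]

def is_suspicious(display_name: str, has_staff_role: bool) -> bool:
    """A reserved title on an account without a staff role is worth a look."""
    return bool(claimed_titles(display_name)) and not has_staff_role
```

With this sketch, `is_suspicious("Official_Support", has_staff_role=False)` returns `True`, while a name like "modern_art" is not flagged because "mod" only matches as a whole word. It will not catch lookalike letters from other alphabets; that needs the similarity approach described in the next section.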

2. Slight Variations on Real Moderator Names

This is one of the most effective impersonation tactics.

If your moderator is:

DanielMartin

The scammer becomes:

DanlelMartin

DanieIMartin

Daniel_Martin

DanielMartln

DanielMartín

At a glance, the difference is nearly invisible.

Now combine that with:

- The same profile picture

- Similar bio

- Similar writing style

To the average user, it looks legitimate.

This is textbook brand impersonation within a community environment.
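
One way to catch these variations is to reduce each name to a "skeleton" before comparing. The sketch below is a simplified illustration: the `CONFUSABLES` map is a tiny hand-picked assumption (real confusable lists are far larger), the 0.85 threshold is arbitrary, and the comparison uses Python's standard `difflib` rather than any specific product's algorithm.

```python
import unicodedata
from difflib import SequenceMatcher

# Tiny, hand-picked confusable map (an assumption for illustration only).
CONFUSABLES = str.maketrans({"1": "l", "i": "l", "|": "l", "0": "o", "5": "s"})

def skeleton(name: str) -> str:
    """Collapse accents, case, lookalike characters, and separators."""
    decomposed = unicodedata.normalize("NFKD", name)
    # Drop combining marks so an accented letter becomes its base letter.
    stripped = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    folded = stripped.casefold().translate(CONFUSABLES)
    # Remove underscores, dots, and spaces.
    return "".join(ch for ch in folded if ch.isalnum())

def similarity(a: str, b: str) -> float:
    """Similarity ratio between 0.0 and 1.0, computed on the skeletons."""
    return SequenceMatcher(None, skeleton(a), skeleton(b)).ratio()

def find_lookalikes(candidate: str, protected: list[str],
                    threshold: float = 0.85) -> list[tuple[str, float]]:
    """Return protected names the candidate resembles, with rounded scores."""
    hits = []
    for real in protected:
        score = similarity(candidate, real)
        if score >= threshold:
            hits.append((real, round(score, 2)))
    return hits
```

All five variations listed above reduce to the same skeleton as "DanielMartin", so each one scores 1.0. The map is deliberately aggressive (every "i" is treated as "l"), which will produce some false positives, and the caller is assumed to exclude the real moderator's own account by user ID before comparing.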

3. Direct Message Scams from “Staff”

Many communities warn members: “Admins will never DM you first.”

Scammers do it anyway, betting that members missed the warning or will forget it under pressure.

They:

- DM new members immediately after they join

- Claim there’s a verification issue

- Claim suspicious activity on the account

- Offer “priority whitelist access”

- Provide fake support links

Because the message appears to come from “Admin” or “Support,” users comply.

4. Fake Security Alerts

Impersonators often create urgency:

- “Your wallet has been compromised.”

- “We detected suspicious behavior.”

- “You must verify in the next 10 minutes.”

- “Your account will be suspended.”

Fear overrides skepticism.

This is especially effective in crypto, trading, NFT, gaming, and investment communities.

5. Copycat Branding

Brand impersonation extends beyond usernames.

Scammers:

- Use your exact logo

- Copy your announcement formatting

- Repost legitimate messages with malicious links

- Create lookalike domains

If your brand is recognizable, you’re a target.
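
Lookalike domains in particular can be checked mechanically. The following is a rough sketch, assuming a hypothetical allowlist (`OFFICIAL_DOMAINS`, here just `example.com`) and an arbitrary similarity threshold. It flags hosts that are close to, or that embed, an official name.

```python
from difflib import SequenceMatcher
from urllib.parse import urlsplit

# Hypothetical allowlist. Entries are assumed to be bare registrable domains.
OFFICIAL_DOMAINS = {"example.com"}

def host_of(url: str) -> str:
    """Extract the lowercase hostname, tolerating a missing scheme."""
    host = urlsplit(url if "://" in url else "//" + url).hostname
    return (host or "").removeprefix("www.")

def classify_link(url: str, threshold: float = 0.8) -> str:
    """Return 'official', 'lookalike', or 'unknown' for a posted link."""
    host = host_of(url)
    for official in OFFICIAL_DOMAINS:
        if host == official or host.endswith("." + official):
            return "official"
    for official in OFFICIAL_DOMAINS:
        # Near-miss spelling, such as a digit swapped in for a letter.
        if SequenceMatcher(None, host, official).ratio() >= threshold:
            return "lookalike"
        # The brand name embedded in an unrelated domain.
        if official.split(".")[0] in host:
            return "lookalike"
    return "unknown"
```

Under these assumptions, `classify_link("https://examp1e.com/verify")` and `classify_link("https://example-support.io/login")` both return `"lookalike"`, while links to `example.com` or its subdomains return `"official"`. A link classified as `"unknown"` is not necessarily safe; it simply does not resemble your domain.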

Why Manual Moderation Fails

Many communities rely on:

- Volunteer moderators

- Report-based systems

- Keyword filters

- Manual username checks

Unfortunately, impersonation is nuanced.

Consider this example:

“Hey, I’m Daniel from the mod team. We noticed your account has a problem.”

There’s nothing inherently offensive or profane here. Keyword filters won’t catch it.

And moderators can’t manually inspect every nickname change in real time—especially in large communities with thousands or tens of thousands of members.

Impersonators move fast.

They:

- Join

- Change nickname

- Send 10–50 DMs

- Leave

All within minutes.

By the time moderators react, the damage is done.
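
The join, rename, and mass-DM sequence is a timing pattern, which makes it a good fit for a sliding-window check. This sketch assumes your tooling can observe these events at all; on many platforms private DMs are not visible to moderation bots, so the DM count may have to come from member reports. The window, threshold, and "new member" cutoff are illustrative values, not recommendations.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Optional

WINDOW_SECONDS = 300      # assumed meaning of "within minutes"
DM_THRESHOLD = 10         # lower end of the 10-50 DM range above
NEW_MEMBER_SECONDS = 900  # assumed: joined less than 15 minutes ago

@dataclass
class MemberActivity:
    joined_at: float
    nickname_changed_at: Optional[float] = None
    dm_times: Deque[float] = field(default_factory=deque)

    def record_dm(self, now: float) -> None:
        """Record one DM and forget those older than the window."""
        self.dm_times.append(now)
        while self.dm_times and now - self.dm_times[0] > WINDOW_SECONDS:
            self.dm_times.popleft()

    def looks_like_hit_and_run(self, now: float) -> bool:
        """New account + nickname change + DM burst inside the window."""
        is_new = now - self.joined_at <= NEW_MEMBER_SECONDS
        renamed = self.nickname_changed_at is not None
        bursting = len(self.dm_times) >= DM_THRESHOLD
        return is_new and renamed and bursting
```

Requiring all three signals together keeps long-standing members who occasionally message many people from being flagged, at the cost of missing a patient scammer who waits before acting.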

The Hidden Cost of Brand Impersonation

When impersonation happens, the harm isn’t just financial.

It impacts:

1. Trust

Members start asking:

- “Is this server safe?”

- “Are the admins legit?”

- “Why are there so many scams?”

Trust is hard to build and easy to lose.

2. Reputation

Victims often post publicly:

- On Twitter/X

- On Reddit

- In other Discord servers

- In reviews

They may blame the brand, not the scammer.

3. Moderator Burnout

Repeated scam waves create constant stress:

- Monitoring alerts

- Responding to victims

- Cleaning up messages

- Reassuring the community

Volunteer moderators burn out quickly under this pressure.

How AI Changes the Game

Impersonation detection requires more than keyword matching.

It requires:

- Username similarity analysis

- Authority title detection

- Context understanding

- Behavioral pattern recognition

- Real-time monitoring

This is where AI-powered moderation becomes essential.

Instead of reacting after reports, communities can proactively detect impersonators the moment they attempt deception.

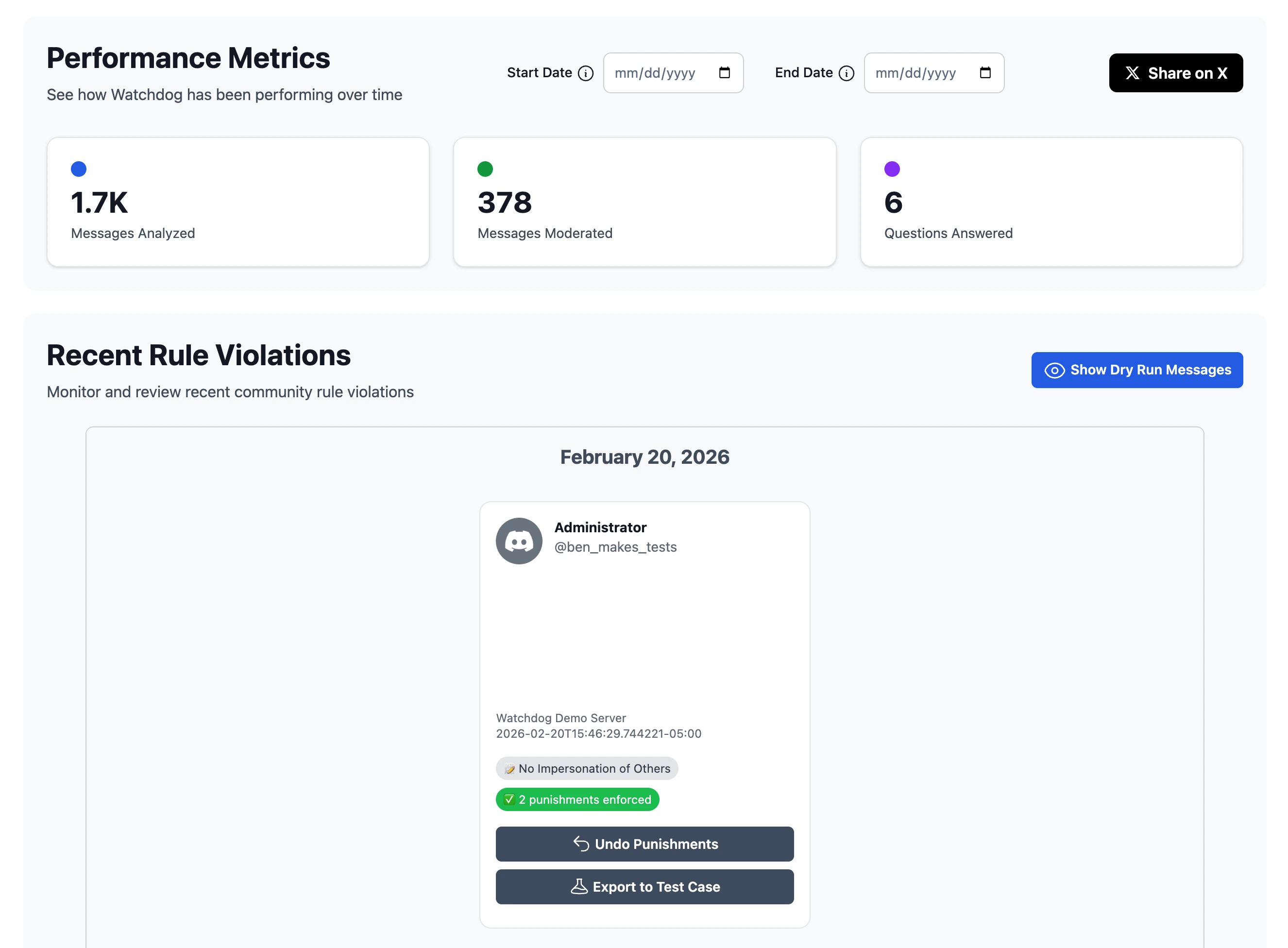

How Watchdog Automatically Detects Impersonation

Watchdog is built specifically to detect scams, impersonation, and rule violations in online communities.

Here’s how it tackles impersonation and brand impersonation at scale.

1. Authority Title Detection

Watchdog can flag users who:

- Change their nickname to “Administrator”

- Add “Mod” or “Admin” to their name

- Claim to be “Support” or “Security”

Even if there’s no profanity or obvious red flag, Watchdog understands the context of authority misuse.

2. Username Similarity Matching

Watchdog analyzes patterns like:

- Character swaps (l vs I)

- Underscore insertions

- Unicode lookalikes

- Accent variations

- Minor spelling deviations

So when someone tries to imitate:

CommunityManagerAlex

with:

CommunltyManagerAlex

Watchdog can detect the similarity and flag it instantly.

3. Behavioral Red Flags

Impersonators often:

- Join and immediately DM multiple members

- Mention “verify,” “security,” or “urgent”

- Share suspicious links

Watchdog evaluates each message in context, not just the individual words it contains.

That’s critical because scams often rely on subtle persuasion and tricky wording rather than obvious spam.

4. Context-Aware Message Analysis

AI models inside Watchdog understand nuance.

For example:

- “I just drank a cup of java.”

- “I love programming in Java.”

Context matters.

Similarly:

- “I’m Daniel.”

- “I’m Daniel from the mod team. You must verify now.”

The second is a red flag.

Watchdog evaluates that difference automatically.
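
To see why the combination matters more than any single word, consider this deliberately simple rule-based stand-in. It is not an AI model and not Watchdog's implementation; the word lists and risk labels are assumptions chosen to mirror the examples above.

```python
import re

# Illustrative word lists (assumptions, not an exhaustive vocabulary).
AUTHORITY = re.compile(
    r"\b(administrator|admin|moderator|mod team|mod|support|staff|security team)\b",
    re.IGNORECASE,
)
URGENCY = re.compile(
    r"\b(verify|urgent|immediately|suspended|compromised|right now)\b",
    re.IGNORECASE,
)
LINK = re.compile(r"https?://\S+", re.IGNORECASE)

def message_signals(text: str) -> dict:
    """Report which of the three signals appear in the message."""
    return {
        "authority_claim": bool(AUTHORITY.search(text)),
        "urgency": bool(URGENCY.search(text)),
        "link": bool(LINK.search(text)),
    }

def risk_level(text: str) -> str:
    """An authority claim combined with urgency or a link is high risk."""
    s = message_signals(text)
    if s["authority_claim"] and (s["urgency"] or s["link"]):
        return "high"
    if any(s.values()):
        return "low"
    return "none"
```

Here "I'm Daniel." scores `"none"`, while "I'm Daniel from the mod team. You must verify now." scores `"high"`. Fixed rules like these are easy to evade by rewording and will also fire on innocent messages such as "Can a moderator look at this?", which is exactly the gap that context-aware models are meant to close.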

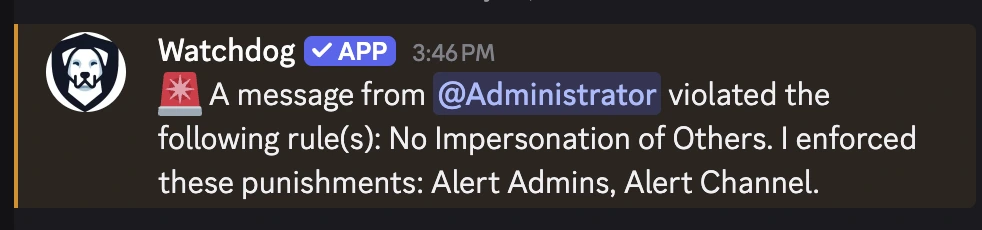

5. Real-Time Intervention

Instead of waiting for reports, Watchdog can:

- Flag the user

- Notify moderators

- Automatically remove messages

- Temporarily restrict permissions

- Log the violation

This happens in seconds—not hours.
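
A graduated response like the list above can be expressed as a small policy function. This is a hypothetical sketch: the 0-to-1 risk score, the three thresholds, and the `Action` names are assumptions for illustration, and a real deployment would tune them to its own tolerance for false positives.

```python
from enum import Enum

class Action(Enum):
    LOG = "log the violation"
    FLAG = "flag the user"
    NOTIFY = "notify moderators"
    REMOVE_MESSAGES = "automatically remove messages"
    RESTRICT = "temporarily restrict permissions"

# Assumed thresholds on a 0.0-1.0 risk score.
FLAG_AT = 0.3
REMOVE_AT = 0.6
RESTRICT_AT = 0.8

def plan_actions(score: float) -> list:
    """Map a risk score to an escalating list of actions."""
    if score < FLAG_AT:
        return []
    actions = [Action.LOG, Action.FLAG, Action.NOTIFY]
    if score >= REMOVE_AT:
        actions.append(Action.REMOVE_MESSAGES)
    if score >= RESTRICT_AT:
        actions.append(Action.RESTRICT)
    return actions
```

Keeping the lower tiers limited to logging and alerts means a borderline case reaches a human moderator before anything is removed, while only the highest scores trigger automatic restrictions.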

Real-World Impersonation Scenarios (And How Watchdog Handles Them)

Scenario 1: The Fake Administrator

A user joins your Discord server and changes their nickname to “Administrator.”

They start DMing members about “account verification.”

What happens with Watchdog?

- Nickname authority misuse is detected.

- Suspicious language patterns are flagged.

- Moderators receive immediate alerts.

- The user can be auto-muted or removed.

Damage is minimized before dozens of members are targeted.

Scenario 2: The Copycat Moderator

Your real moderator:

SarahMod

Scammer:

SarrahMod

Same profile picture. Same bio.

They start messaging users about a “limited-time airdrop.”

Watchdog:

- Detects high similarity to existing moderator usernames.

- Flags suspicious link behavior.

- Identifies authority claims in messages.

- Escalates instantly.

Without automation, this would likely go unnoticed for far too long.

Scenario 3: Brand Impersonation in Announcements

A scammer posts:

“Official Update: Click here to secure your account.”

The formatting mimics your real announcements.

Watchdog:

- Analyzes message tone and structure.

- Detects suspicious links.

- Identifies authority framing.

- Flags or removes the message before it spreads.

Why Every Growing Community Becomes a Target

If your community has:

- Active engagement

- Financial activity

- Recognizable branding

- Public visibility

You will eventually attract impersonators.

Scammers look for leverage.

And trust is leverage.

The larger your community grows, the more scalable impersonation becomes for attackers.

Prevention must scale too.

Impersonation Prevention Best Practices

Even with AI moderation, you should:

- Publicly state that staff will never DM first.

- Use visible role badges and verification markers.

- Restrict who can change nicknames, especially for new or unverified members.

- Regularly remind members about scam patterns.

- Log impersonation attempts for internal review.

But understand this:

Education alone is not enough.

Scammers rely on speed and volume.

Automation is what levels the playing field.

Protecting Your Brand Before Damage Happens

Brand impersonation doesn’t just hurt victims—it weakens your authority.

If members repeatedly get scammed inside your community, they may:

- Leave

- Warn others

- Accuse leadership of negligence

Proactive impersonation detection shows that:

- You take security seriously

- You value member safety

- You protect your brand reputation

Using a system like Watchdog demonstrates operational maturity.

The Bottom Line

Impersonation and brand impersonation are not rare edge cases.

They are persistent, evolving threats in online communities.

From fake “Administrators” to nearly identical moderator usernames, scammers exploit authority, urgency, and trust to manipulate members.

Manual moderation cannot keep up with the speed and subtlety of modern impersonation tactics.

AI-powered systems like Watchdog provide:

- Real-time detection

- Context-aware analysis

- Username similarity matching

- Authority misuse flagging

- Automated enforcement

If you run a Discord server, Telegram group, or any other online community, protecting against impersonation is foundational.

The question isn’t whether impersonators will target your community.

It’s whether you’ll detect them before your members pay the price.